Part of v2.7.0 · Chapter 4: Content & Growth

Building Ask Prism: AI Components and Chain of Thought Visualization

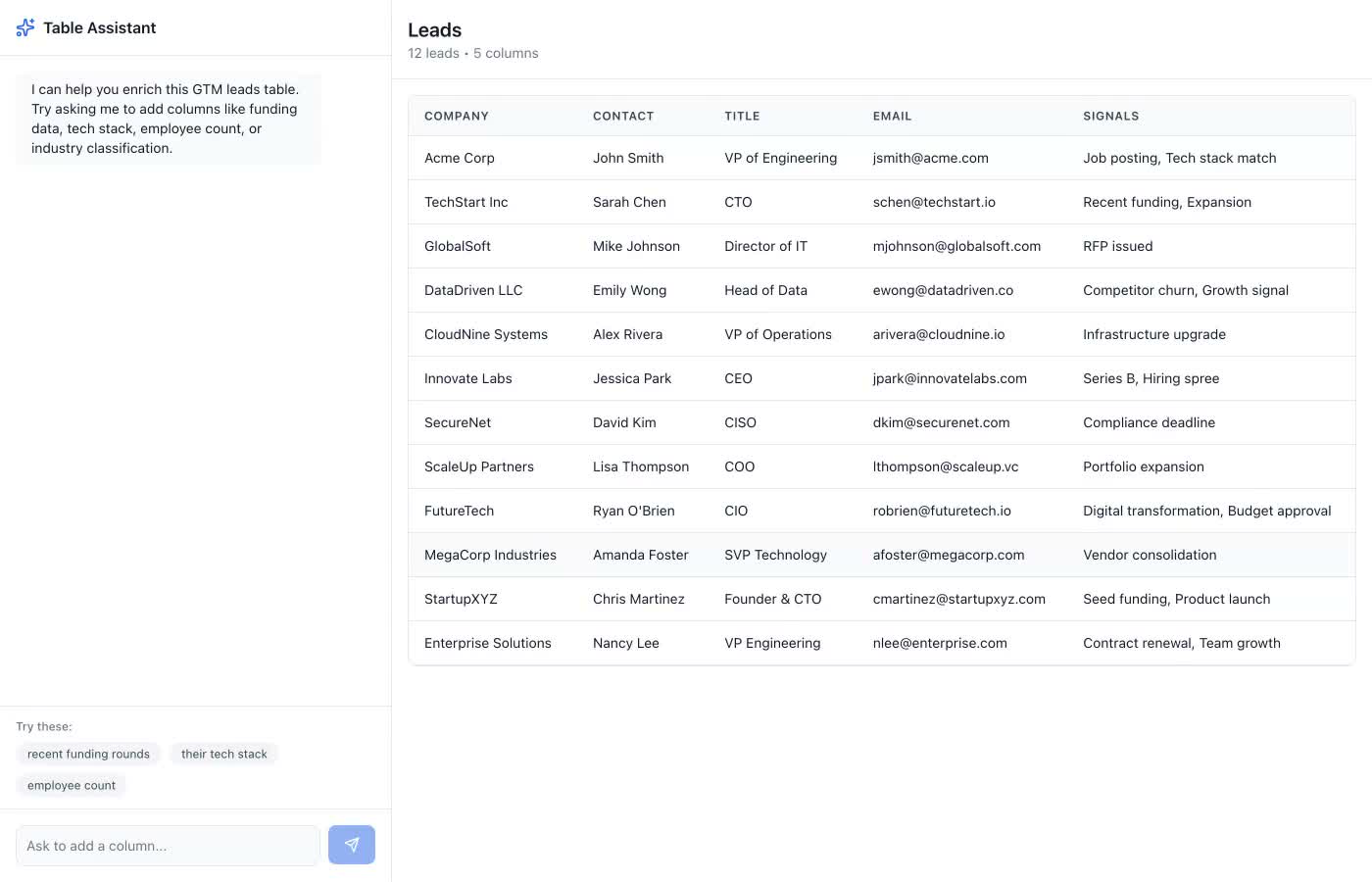

I was building a document Q&A tool when I ran into a wall. The AI worked great—it could answer questions about uploaded PDFs with impressive accuracy. But every time I demo'd it to someone, I got the same reaction: a slight squint, a pause, then "but how do I know it's not making this up?"

That question changed everything.

The Waiting Game

Picture this: you upload a 50-page contract, type a question, and hit enter. Then... nothing. A spinning circle. Maybe a "Processing..." message. Five seconds pass. Ten. Is it working? Is it stuck? Did it crash?

I watched testers do this over and over. They'd wait a few seconds, then start clicking around. Refresh the page. Try again. The AI was actually working fine—it just wasn't showing its work.

This is the curse of the black box. Modern AI can do remarkable things, but if users can't see what's happening, they don't trust it. And if they don't trust it, they won't use it.

I realized the product wasn't a document Q&A tool. It was a trust problem wearing a document Q&A costume.

The Moment of Clarity

The breakthrough came from an unlikely place: watching someone use ChatGPT. When it introduced that little "Thinking..." indicator with the collapsing steps, I noticed something change in how people interacted with it. The interface was showing process, not just result.

That's when it clicked. What if the AI didn't just give you an answer, but showed you exactly how it arrived there? Not in some abstract "here's my confidence score" way, but visually—step by step, in real time.

I started sketching what this might look like. Analyzing your question. Searching the documents. Finding relevant passages. Generating the answer. Verifying accuracy. Each step visible, each step building confidence.

Seeing the Thinking

The chain of thought display became the heart of Ask Prism. When you ask a question, you watch the AI work through its reasoning.

Each step shows what's happening in plain language. "Analyzing query" tells you it understood what you asked. "Vector search" reveals it's looking through your documents. "Verification" shows it's double-checking its work. The little checkmarks accumulate, and by the time the answer appears, you've already seen the rigor behind it.

The magic is in the details. Active steps show a subtle spinner. Completed steps get a checkmark. When everything finishes, the whole panel gracefully collapses—the work is done, and the UI cleans up after itself.
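The step behavior above (pending, then a spinner while active, then a checkmark, then a collapse once everything finishes) fits a very small state model. The sketch below is one way to express it, assuming each pipeline stage reports progress by an id. All names here (`ThoughtStep`, `setStatus`, `iconFor`, `isCollapsed`) are hypothetical, not Ask Prism's actual API.

```typescript
// Hypothetical state model for a chain of thought panel (illustrative names).
type StepStatus = "pending" | "active" | "complete";

interface ThoughtStep {
  id: string;
  label: string; // plain-language label, e.g. "Analyzing query"
  status: StepStatus;
}

// Every step starts out pending (rendered without an icon).
function createSteps(defs: Array<{ id: string; label: string }>): ThoughtStep[] {
  return defs.map((d): ThoughtStep => ({ id: d.id, label: d.label, status: "pending" }));
}

// Return a new list with one step moved to the given status; never mutates.
function setStatus(steps: ThoughtStep[], id: string, status: StepStatus): ThoughtStep[] {
  return steps.map((s): ThoughtStep => (s.id === id ? { ...s, status } : s));
}

// Active steps render a spinner; completed steps render a checkmark.
function iconFor(step: ThoughtStep): "spinner" | "check" | "none" {
  switch (step.status) {
    case "active":
      return "spinner";
    case "complete":
      return "check";
    default:
      return "none";
  }
}

// The panel collapses only once every step has finished.
function isCollapsed(steps: ThoughtStep[]): boolean {
  return steps.length > 0 && steps.every((s) => s.status === "complete");
}
```

Because `setStatus` returns a new array instead of mutating, it could serve as the body of a React `useReducer` reducer, with pipeline progress events dispatched as actions.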

This isn't just eye candy. Testers stopped asking "how do I know it's not making this up?" They could see how. The five seconds of processing that used to feel like uncertainty now felt like thoroughness.

Try the interactive demo in Storybook to see the animation in action.

The Clickable Truth

But showing the thinking wasn't enough. I wanted to go further: let users verify for themselves.

When Ask Prism gives you an answer, it includes citations—little numbered markers that reference specific passages in your documents. Here's where it gets interesting: click one of those citations, and the PDF viewer scrolls directly to that passage. Not just to the page, but to the exact spot, highlighted with a colored box around the relevant text.
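To land on the exact spot, a citation has to carry more than a page number. This minimal sketch makes several assumptions:

- Each citation stores a zero-based page index plus the passage's bounding box in PDF user-space points.
- The page's MediaBox starts at (0, 0).
- The viewer stacks pages vertically at a uniform scale, with known top offsets.

PDF coordinates put the origin at the bottom-left of the page, while the browser lays pages out top-down in CSS pixels, so the box has to be flipped and scaled. The names (`Citation`, `PdfRect`, `toHighlightRect`, `scrollTopFor`) are hypothetical. The source doesn't say which PDF library Ask Prism uses.

```typescript
// Hypothetical citation model: a bounding box in PDF points (1/72 inch),
// origin at the bottom-left of the page, assuming MediaBox starts at (0, 0).
interface PdfRect {
  x0: number;
  y0: number;
  x1: number;
  y1: number;
}

interface Citation {
  marker: number; // the numbered marker shown in the answer
  pageIndex: number; // zero-based page index
  rect: PdfRect;
}

interface PageLayout {
  top: number; // page's top offset inside the scroll container, in CSS px
  heightPt: number; // page height in PDF points
}

interface HighlightRect {
  left: number;
  top: number;
  width: number;
  height: number;
}

// Flip the y-axis and scale points to CSS pixels, relative to the page's top-left.
function toHighlightRect(rect: PdfRect, pageHeightPt: number, scale: number): HighlightRect {
  return {
    left: Math.min(rect.x0, rect.x1) * scale,
    top: (pageHeightPt - Math.max(rect.y0, rect.y1)) * scale,
    width: Math.abs(rect.x1 - rect.x0) * scale,
    height: Math.abs(rect.y1 - rect.y0) * scale,
  };
}

// Scroll position that puts the cited passage just below the container's top edge.
function scrollTopFor(citation: Citation, pages: PageLayout[], scale: number, margin = 24): number {
  const page = pages[citation.pageIndex];
  if (!page) throw new Error(`no page at index ${citation.pageIndex}`);
  const box = toHighlightRect(citation.rect, page.heightPt, scale);
  return Math.max(0, page.top + box.top - margin);
}
```

If the viewer is built on pdf.js, its `PageViewport.convertToViewportRectangle` performs the same point-to-viewport transform and also accounts for page rotation.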

It's the difference between "trust me" and "here, check for yourself."

The first time I showed this to a tester, they clicked a citation, watched the PDF jump to the highlighted passage, read it, and said "oh, that's actually what it says." The skepticism evaporated. They weren't trusting the AI anymore—they were verifying it, which is even better.

Trust Through Redundancy

One more layer of paranoia: what if the AI is wrong even when it sounds confident? Document analysis in professional settings demands reliability. A wrong answer about a contract clause could have real consequences.

So I built in a verification step. After the primary model generates an answer, a second model reviews it. If they disagree, a third model mediates. The chain of thought display shows all of this happening—users see "Verifying accuracy" and "Cross-checked with Claude" right there in the steps.
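The primary, reviewer, and mediator flow can be sketched as one async function. It reports each stage through a callback so the chain of thought display can show it. This is a sketch under several assumptions:

- Every model sits behind a hypothetical `Model` interface.
- The reviewer is prompted to begin its reply with AGREE or DISAGREE.
- The prompts are placeholders, not Ask Prism's real ones.

`answerWithVerification`, `Model`, and `StepReporter` are illustrative names.

```typescript
// Hypothetical wrapper around any LLM provider.
interface Model {
  name: string;
  complete(prompt: string): Promise<string>;
}

interface VerifiedAnswer {
  answer: string;
  verifiedBy: string[]; // models that endorsed the final answer
  mediated: boolean; // true if a third model resolved a disagreement
}

// Called with a plain-language label each time a stage starts.
type StepReporter = (label: string) => void;

// Assumed reviewer convention: the reply starts with "AGREE" or "DISAGREE".
function agrees(review: string): boolean {
  return review.trim().toUpperCase().indexOf("AGREE") === 0;
}

async function answerWithVerification(
  question: string,
  context: string,
  primary: Model,
  reviewer: Model,
  mediator: Model,
  onStep: StepReporter
): Promise<VerifiedAnswer> {
  onStep("Generating answer");
  const draft = await primary.complete(`Context:\n${context}\n\nQuestion: ${question}`);

  onStep("Verifying accuracy");
  const review = await reviewer.complete(
    `Is this answer supported by the context? Reply AGREE or DISAGREE, then explain.\n\n` +
      `Context:\n${context}\n\nAnswer:\n${draft}`
  );
  if (agrees(review)) {
    onStep(`Cross-checked with ${reviewer.name}`);
    return { answer: draft, verifiedBy: [primary.name, reviewer.name], mediated: false };
  }

  // The two models disagree: a third model decides, using only the context.
  onStep(`Resolving disagreement with ${mediator.name}`);
  const resolved = await mediator.complete(
    `Two models disagree. Using only the context, give the correct answer.\n\n` +
      `Context:\n${context}\n\nDraft:\n${draft}\n\nObjection:\n${review}`
  );
  return { answer: resolved, verifiedBy: [mediator.name], mediated: true };
}
```

Passing the step reporter in as a callback keeps the orchestration independent of the UI. The same labels can drive the chain of thought panel, a log, or a test.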

This multi-model approach catches many hallucinations before users ever see them. The research backs this up: dual verification significantly improves accuracy over single-model approaches. When accuracy matters, the extra few seconds of processing time are worth it.

What This Taught Me

Building Ask Prism started as an AI project and ended as a UX project. The technical challenge—RAG pipelines, vector search, PDF parsing—was just the foundation. The real work was in the interface: making the invisible visible.
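The vector search in that foundation is conceptually small. Embed the question, score every document chunk by cosine similarity, and keep the best few. The sketch below assumes chunks were already embedded at upload time and tagged with their page number so answers can cite back into the PDF. The source doesn't name the embedding model or vector store, and `Chunk`, `cosine`, and `topK` are hypothetical names. A brute-force scan like this is reasonable for a single document. Larger corpora would typically use an approximate nearest-neighbor index instead.

```typescript
// Hypothetical pre-embedded chunk of an uploaded PDF.
interface Chunk {
  id: string;
  page: number; // kept so a citation can point back into the PDF
  text: string;
  embedding: number[];
}

// Cosine similarity: dot product divided by the product of vector norms.
function cosine(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("embedding dimensions differ");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every chunk against the query embedding and keep the k best.
function topK(query: number[], chunks: Chunk[], k: number): Array<{ chunk: Chunk; score: number }> {
  return chunks
    .map((chunk) => ({ chunk, score: cosine(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```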

Three principles emerged that I'll carry forward:

Transparency beats speed. Users would rather wait five seconds with visibility than three seconds in the dark. Adding the chain of thought display didn't change the processing time at all, but it completely transformed how that time felt.

Verification beats trust. Don't ask users to believe you. Give them tools to check for themselves. Clickable citations turn skeptics into believers more reliably than any reassurance.

Show the work, then clean up. The chain of thought display shows everything during processing, then gracefully collapses when done. It's there when you need it, invisible when you don't.

The components I built for Ask Prism—the chain of thought display, the citation system, the step indicators—ended up being reusable. I've ported the chain of thought component to this portfolio's design system, where you can interact with it and see how it works.

What started as a document Q&A tool became something more: a template for how AI interfaces should communicate with the humans using them.

Links: